Artificial intelligence has made remarkable strides in recent years, and at the heart of many of its most powerful systems lies a breakthrough architecture: the Transformer. Introduced in 2017, this innovative model has revolutionized how machines understand and generate human language.

From chatbots like ChatGPT to search-integrated AI like Google Bard, Transformers are the driving force behind their fluency, coherence, and contextual understanding. But what exactly is a Transformer? How does it work, and why has it become the foundation for today’s most advanced AI systems?

In this article, we’ll take a deep dive into the Transformer architecture—breaking down its components, understanding how it processes information, and exploring why it has become a game-changer in the world of AI.

What is a Transformer?

A Transformer is a deep learning architecture introduced in the landmark 2017 paper “Attention Is All You Need.” Unlike earlier models such as RNNs and LSTMs, Transformers do not process input one step at a time. Instead, they use a mechanism called self-attention to model the relationships between all elements of a sequence.

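Because no position has to wait for the output of the previous one, all positions can be processed in parallel during training. This makes training more efficient and lets the model draw context from anywhere in the sequence.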

High-Level Architecture of a Transformer

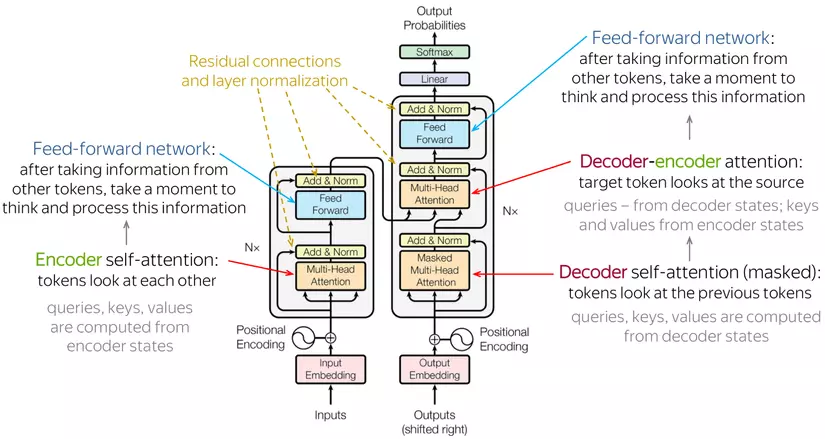

A Transformer consists of two main components:

- Encoder: Takes the input and converts it into a meaningful sequence of hidden vectors.
- Decoder: Uses the hidden vectors from the encoder to generate outputs (e.g., translations, sentence generation).

Each component is a stack of identical layers built around two key sublayers (decoder layers add a further attention sublayer that reads the encoder output):

Multi-Head Self-Attention

This is the core of the Transformer. It lets the model determine which words in a sentence matter most in relation to the others. Multi-head attention projects the input into several lower-dimensional “heads” that each run attention in parallel, enabling the model to learn multiple types of semantic relationships simultaneously.

Example: In the sentence “Hanoi is the capital of Vietnam,” self-attention helps the model recognize the relationship between “Hanoi,” “capital,” and “Vietnam.”
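To make the idea of "heads" concrete, here is a minimal NumPy sketch of multi-head self-attention. It is an illustration under stated assumptions, not a reference implementation: the weight matrices are random stand-ins for learned parameters, the sizes (`seq_len`, `d_model`, `num_heads`) are chosen arbitrarily, and details such as masking, biases, and dropout are left out.

```python
import numpy as np

def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def multi_head_self_attention(x, w_q, w_k, w_v, w_o, num_heads):
    """x: (seq_len, d_model); every weight matrix: (d_model, d_model)."""
    seq_len, d_model = x.shape
    d_head = d_model // num_heads

    def split_heads(t):
        # (seq_len, d_model) -> (num_heads, seq_len, d_head)
        return t.reshape(seq_len, num_heads, d_head).transpose(1, 0, 2)

    q = split_heads(x @ w_q)
    k = split_heads(x @ w_k)
    v = split_heads(x @ w_v)

    # Each head computes its own (seq_len, seq_len) attention pattern.
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(d_head)
    weights = softmax(scores, axis=-1)
    heads = weights @ v                       # (num_heads, seq_len, d_head)

    # Concatenate the heads and mix them with the output projection.
    concat = heads.transpose(1, 0, 2).reshape(seq_len, d_model)
    return concat @ w_o, weights

# Hypothetical sizes: six tokens (e.g. "Hanoi is the capital of Vietnam"), four heads.
rng = np.random.default_rng(0)
seq_len, d_model, num_heads = 6, 16, 4
x = rng.normal(size=(seq_len, d_model))       # stand-in token embeddings
w_q, w_k, w_v, w_o = (rng.normal(size=(d_model, d_model)) * 0.1 for _ in range(4))

out, weights = multi_head_self_attention(x, w_q, w_k, w_v, w_o, num_heads)
print(out.shape, weights.shape)               # (6, 16) (4, 6, 6)
```

Each of the four heads produces its own 6×6 weight matrix, which is what allows different heads to track different relationships between the same six tokens.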

Feed-Forward Neural Network (FFNN)

After attention is computed, each position’s vector is passed independently through a small feed-forward neural network, which adds non-linearity and lets the model build more abstract features.
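A feed-forward sublayer of this kind can be sketched in a few lines of NumPy. The version below assumes the two-linear-layer form with a ReLU in between, as in the original paper. The sizes and random weights are placeholders chosen for illustration.

```python
import numpy as np

def feed_forward(x, w1, b1, w2, b2):
    """Two linear layers with a ReLU in between, applied to every position."""
    hidden = np.maximum(0.0, x @ w1 + b1)    # expand to d_ff, apply non-linearity
    return hidden @ w2 + b2                  # project back to d_model

rng = np.random.default_rng(0)
seq_len, d_model, d_ff = 6, 16, 64           # hypothetical sizes
x = rng.normal(size=(seq_len, d_model))
w1, b1 = rng.normal(size=(d_model, d_ff)) * 0.1, np.zeros(d_ff)
w2, b2 = rng.normal(size=(d_ff, d_model)) * 0.1, np.zeros(d_model)

y = feed_forward(x, w1, b1, w2, b2)

# Positions do not interact here: feeding one row alone gives the same result.
single = feed_forward(x[2:3], w1, b1, w2, b2)[0]
print(y.shape, np.allclose(y[2], single))
```

The check at the end shows why this sublayer is often called "position-wise": mixing information between tokens is left entirely to attention.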

Additional components include:

- Residual Connections: Shortcut links between layers to preserve information.
- Layer Normalization: Stabilizes training.
- Positional Encoding: Adds positional information to inputs since Transformers lack inherent sequence order (unlike RNNs).
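The three supporting components can be sketched together. This example assumes the fixed sine/cosine positional encoding and the "add, then normalize" ordering of the original paper. Many later models use learned positions or normalize before the sublayer instead. The helper names and the random "sublayer" are hypothetical.

```python
import numpy as np

def sinusoidal_positional_encoding(seq_len, d_model):
    """Fixed sine/cosine encoding (d_model is assumed to be even)."""
    positions = np.arange(seq_len)[:, None]               # (seq_len, 1)
    even_dims = np.arange(0, d_model, 2)[None, :]         # (1, d_model / 2)
    angles = positions / np.power(10000.0, even_dims / d_model)
    pe = np.zeros((seq_len, d_model))
    pe[:, 0::2] = np.sin(angles)    # even dimensions
    pe[:, 1::2] = np.cos(angles)    # odd dimensions
    return pe

def layer_norm(x, eps=1e-5):
    """Normalize each position's vector; learnable gain and bias are omitted."""
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def add_and_norm(x, sublayer):
    """Residual connection followed by layer normalization."""
    return layer_norm(x + sublayer(x))

rng = np.random.default_rng(0)
seq_len, d_model = 6, 16                                  # hypothetical sizes
embeddings = rng.normal(size=(seq_len, d_model))          # stand-in token embeddings
pe = sinusoidal_positional_encoding(seq_len, d_model)
x = embeddings + pe                                       # inject order information

w = rng.normal(size=(d_model, d_model)) * 0.1             # stand-in for a real sublayer
y = add_and_norm(x, lambda t: t @ w)
print(y.shape)
```

Because the positional encoding is simply added to the embeddings, two identical words at different positions enter the first layer as different vectors.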

Attention – The Heart of a Transformer

The attention mechanism allows the model to “focus” on the most relevant parts of the input. In self-attention, each word is compared with every other word to assess relevance.

The attention formula:

$$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\left(\frac{QK^{T}}{\sqrt{d_k}}\right)V$$

where:

- Q: Query
- K: Key
- V: Value
- d_k: Dimensionality of the key vector

Using multiple heads enables the model to learn different aspects of the input data simultaneously.
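Scaled dot-product attention, written softmax(QKᵀ / √d_k)·V in the notation of the original paper, translates almost directly into NumPy. The sketch below covers a single head without masking. For simplicity it assumes the queries, keys, and values are all the raw input `x`, whereas a real model would first apply learned projections.

```python
import numpy as np

def scaled_dot_product_attention(q, k, v):
    """q, k: (seq_len, d_k); v: (seq_len, d_v). Returns (output, weights)."""
    d_k = k.shape[-1]
    scores = q @ k.T / np.sqrt(d_k)                        # relevance of every key to every query
    scores = scores - scores.max(axis=-1, keepdims=True)   # for numerical stability
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)  # softmax over the keys
    return weights @ v, weights

rng = np.random.default_rng(0)
x = rng.normal(size=(5, 8))            # five tokens, d_k = 8 (hypothetical sizes)
output, weights = scaled_dot_product_attention(x, x, x)

print(weights.shape)                   # (5, 5): one row of weights per token
print(weights.sum(axis=-1))            # every row sums to 1
```

Row i of `weights` says how much token i draws from each token in the sequence. The division by √d_k keeps the scores from growing with the vector size, which would otherwise push the softmax toward near one-hot outputs.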

Why Are Transformers So Powerful?

Since their debut, Transformers have become the go-to architecture for modern AI. Here’s why:

Parallel Processing Instead of Sequential

Unlike RNNs and LSTMs that process data step-by-step, Transformers can handle the entire input sequence at once thanks to self-attention. This allows:

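- Significantly faster training
- Better GPU utilization
- Easier handling of long sequences, since no hidden state has to be carried step by step (although the cost of attention grows quadratically with sequence length)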

This is one of the main reasons why models like GPT can be trained on corpora of billions of words in a practical amount of time.
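The difference between the two styles of computation shows up clearly in code. The following sketch contrasts a simple tanh recurrence (a stand-in for an RNN cell, with hypothetical random weights) against unprojected self-attention over the same input.

```python
import numpy as np

rng = np.random.default_rng(0)
seq_len, d = 8, 4                              # hypothetical sizes
x = rng.normal(size=(seq_len, d))
w_x = rng.normal(size=(d, d)) * 0.5
w_h = rng.normal(size=(d, d)) * 0.5

# Recurrent style: step t needs the hidden state from step t-1,
# so this loop cannot be parallelized across time steps.
h = np.zeros(d)
states = []
for t in range(seq_len):
    h = np.tanh(x[t] @ w_x + h @ w_h)
    states.append(h)
rnn_states = np.stack(states)

# Self-attention style: all pairwise scores come from one matrix product,
# so every position is handled in the same batched operation.
scores = x @ x.T / np.sqrt(d)
scores = scores - scores.max(axis=-1, keepdims=True)
weights = np.exp(scores)
weights = weights / weights.sum(axis=-1, keepdims=True)
attn_out = weights @ x

print(rnn_states.shape, attn_out.shape)        # (8, 4) (8, 4)
```

Both produce one vector per position, but only the second maps onto the large matrix multiplications that GPUs are built for. The price is the `seq_len × seq_len` score matrix, which is where the quadratic cost comes from.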

Capturing Long-Range Dependencies

Self-attention enables the model to understand relationships between distant words in a sequence—something traditional RNNs struggle with.

Example: In the sentence “The book I read last week was fascinating,” a Transformer easily links “book” with “fascinating” despite the distance between them.

This capability is crucial for tasks such as:

- Machine translation
- Question answering
- Document summarization

Flexible and Intelligent Attention

Self-attention allows the model to prioritize important parts of the input instead of treating all elements equally. Multi-head attention further enhances this by letting the model view the input from different perspectives.

One attention head might learn grammar dependencies, while another learns semantic associations.

Generalization and Scalability

Transformers are highly adaptable—not just for natural language processing, but also for:

- Computer vision: Vision Transformers (ViT)
- Biology: AlphaFold for protein structure prediction
- Audio & Music: Audio Transformers

Their versatility makes them a general-purpose architecture for any data that can be represented as a sequence, including image patches and amino-acid chains.

Large-Scale Training Capabilities

Transformers scale well both to massive datasets and to models with billions of parameters. This scalability has led to the development of powerful language models like:

- GPT-3 with 175 billion parameters, and its successor GPT-4 (whose size has not been publicly disclosed)
- PaLM, LLaMA, Claude – all Transformer-based models
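A back-of-the-envelope calculation shows where such parameter counts come from. The sketch below is a rough estimate only. It counts the attention and feed-forward weight matrices of each layer, assumes a feed-forward width of four times the model width, and ignores embeddings, biases, and layer normalization. The function name is hypothetical, and the example plugs in the published GPT-3 configuration (96 layers, model width 12288).

```python
def approx_transformer_params(num_layers, d_model, d_ff=None):
    """Rough weight count for the stacked layers of a Transformer."""
    d_ff = 4 * d_model if d_ff is None else d_ff    # assumed feed-forward width
    attention = 4 * d_model * d_model               # W_Q, W_K, W_V and output projection
    feed_forward = 2 * d_model * d_ff               # two linear layers
    return num_layers * (attention + feed_forward)

n = approx_transformer_params(num_layers=96, d_model=12288)
print(f"{n / 1e9:.0f}B")   # → 174B
```

The estimate lands close to the 175 billion usually quoted for GPT-3, which suggests that almost all of the parameters sit in the per-layer attention and feed-forward matrices, and that the count grows with the square of the model width.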

Real-World Applications of Transformers

Transformer architecture powers many cutting-edge AI models:

- GPT (Generative Pre-trained Transformer) – Text generation
- BERT (Bidirectional Encoder Representations from Transformers) – Deep context understanding
- RoBERTa, XLNet, T5 – Later models that refine or offer alternatives to BERT’s pre-training approach
- Vision Transformer (ViT) – Computer vision
- AlphaFold – 3D protein structure prediction

Conclusion

The Transformer is not just a new neural network—it’s a paradigm shift in artificial intelligence. It has redefined how AI processes language, images, audio, and other complex data types.

Understanding the Transformer architecture provides insight into the foundations of modern AI and the technologies shaping our future.